One of the core processes in a data engineer's work is called ETL (Extract, Transform, Load), which lets organisations extract data from different sources, apply the appropriate transformations and load the result into a destination where it can be used to support business strategy. This series of processes must be automated and orchestrated as needed, with the aim of reducing costs, speeding up processing and reducing the risk of human error.

Among the free software alternatives for workflow orchestration is Apache Airflow, which lets us schedule and automate different pipelines. Airflow describes each pipeline as a directed acyclic graph (DAG), and this is what makes it possible to automate an ETL pipeline run end to end. Each task is a node in that graph and is defined as an operator. Numerous predefined operators are available, and you can develop your own operators if necessary.

Apache Airflow is mainly composed of a webserver, which serves as the user interface, and a scheduler, which is in charge of scheduling runs and checking the status of the tasks in the DAG. Inside the scheduler, a component called the Executor determines how and where those tasks are actually run, for example by handing them to workers (worker nodes). The way this architecture is deployed makes the platform scalable and cost-efficient.

The usual first way of setting up an Airflow environment is standalone mode, which runs all of these components on a single machine.
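
To make the scheduler, executor and worker split more concrete, here is a minimal sketch in plain Python of running a DAG of ETL tasks in dependency order. It does not use Airflow's API: the task functions (`extract_orders`, `extract_prices`, `transform`, `load`), the sample data and `run_dag` are all hypothetical. `graphlib.TopologicalSorter` plays the role of the scheduler deciding which tasks are ready, and a `ThreadPoolExecutor` stands in for the executor handing ready tasks to a pool of workers. Airflow's real executors (for example the LocalExecutor, CeleryExecutor or KubernetesExecutor) do this across processes, machines or pods rather than threads.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter
from typing import Callable

# Hypothetical sketch, not Airflow's API: each "task" is a plain function
# sharing a context dict, where Airflow would use an operator instance.
Task = Callable[[dict], None]


def extract_orders(ctx: dict) -> None:
    ctx["orders"] = [("alice", " 10"), ("bob", "20 "), ("alice", "x")]


def extract_prices(ctx: dict) -> None:
    ctx["rate"] = 2


def transform(ctx: dict) -> None:
    # Drop malformed amounts and convert the rest using the extracted rate.
    totals: dict[str, int] = {}
    for customer, raw in ctx["orders"]:
        amount = raw.strip()
        if amount.isdigit():
            totals[customer] = totals.get(customer, 0) + int(amount) * ctx["rate"]
    ctx["totals"] = totals


def load(ctx: dict) -> None:
    # Stand-in for writing to a warehouse table.
    ctx["warehouse"] = sorted(ctx["totals"].items())


TASKS: dict[str, Task] = {
    "extract_orders": extract_orders,
    "extract_prices": extract_prices,
    "transform": transform,
    "load": load,
}
# Edges of the DAG: each task maps to the set of upstream tasks it waits for.
UPSTREAM = {"transform": {"extract_orders", "extract_prices"}, "load": {"transform"}}


def run_dag(tasks: dict[str, Task], upstream: dict[str, set[str]], workers: int = 2):
    """Run tasks in dependency order, dispatching ready tasks to a worker pool."""
    sorter = TopologicalSorter(upstream)
    for name in tasks:
        sorter.add(name)  # also register tasks with no upstream dependencies
    sorter.prepare()  # raises graphlib.CycleError if the graph is not acyclic
    ctx: dict = {}
    finished: list[str] = []
    running: dict = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        while sorter.is_active():
            # "Scheduler": submit every task whose upstream tasks have finished.
            for name in sorter.get_ready():
                running[pool.submit(tasks[name], ctx)] = name
            done, _ = wait(running, return_when=FIRST_COMPLETED)
            for future in done:
                future.result()  # re-raise a task failure instead of hiding it
                name = running.pop(future)
                finished.append(name)
                sorter.done(name)  # unblocks downstream tasks
    return finished, ctx


order, result = run_dag(TASKS, UPSTREAM)
print(order)  # both extract tasks (in either order), then transform, then load
print(result["warehouse"])  # [('alice', 20), ('bob', 40)]
```

The two extract tasks have no dependency on each other, so they can run in parallel on different workers, while `transform` only starts once both have finished. Because `prepare()` rejects cycles, the sketch also shows why the graph must be acyclic: with a circular dependency no task could ever become ready.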

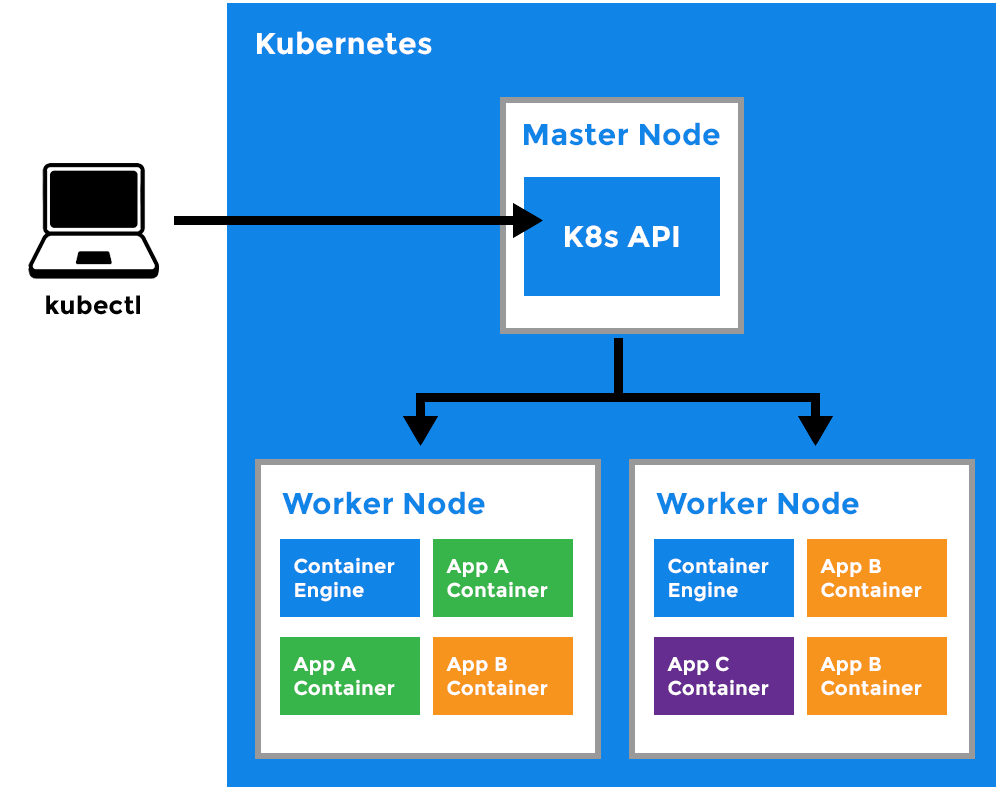

How to deploy the Apache Airflow process orchestrator on Kubernetes
